It's the year 203X. The fire alarm industry is on the brink of a technological singularity, and it all began right here in Hazard City.

I’m Sparky Sands. I’m a 25-year-old fire alarm technician and enthusiast living on my own on the outskirts of town. My basement is home to a collection of over 100 vintage and rare fire alarm devices, as well as a subterranean workshop where I’ve spent the past two years working on a top-secret project.

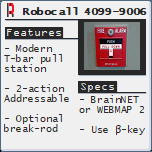

Before I go into any more detail about the project, let me first give you a bit of background. This is an Omnimeter:

It’s a powerful handheld device that we fire alarm technicians use for a wide variety of tasks. In addition to the basic volts/amps/ohms functions, we use them for storing device maps, communicating with each other in the field, and programming fire alarm control panels (FACPs).

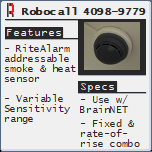

Fire alarm software has become much more open source in recent years, and even highly proprietary software like Synthex’s RiteSite can be installed on an Omnimeter if you have the proper credentials.

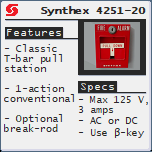

Unfortunately, that’s all rendered moot when your home fire alarm system is a 1990s-era Synthex 4004. This panel has no Omnimeter connection port and must be programmed by pressing the same keys repeatedly à la texting on a flip phone.

It’s the panel my dad got me for my 18th birthday, and it holds a lot of sentimental value for me. The device profile I created was based on my old elementary school.

Getting to hear that sound when I test the system really brings me back. But the clunky controls and non-addressable (read: “dumb”) initiating circuits meant that its days were as numbered as its seven-segment LCD display.

It was time for a new system, and that got me thinking. Modern fire alarm systems are often called “intelligent”. But I prefer to call them by their technical term: addressable. These systems use what are called signaling line circuits (SLCs), which transmit data instead of just the raw power carried by the conventional initiating device circuits (IDCs) on the 4004.

This data transmission allows devices to be tracked and configured individually from the comfort of the control panel or workstation. This technology has existed since the 198Xs and has evolved at a fairly linear pace since then.
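If you think better in code, here’s a rough sketch of the difference in plain C (not Middle C). Every name in it is made up for illustration and has nothing to do with how any real panel stores its data. A conventional zone can only tell the panel that something on the circuit tripped, while an addressable loop lets the panel ask each device by address.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Conventional IDC (hypothetical model): the panel only knows
   whether the circuit as a whole is in alarm. */
typedef struct {
    bool in_alarm;
} ConventionalZone;

/* Addressable SLC (hypothetical model): each device reports
   its own status under its own address. */
typedef enum { DEVICE_NORMAL, DEVICE_DIRTY, DEVICE_ALARM } DeviceStatus;

typedef struct {
    int address;          /* unique address on the loop */
    const char *label;    /* human-readable location */
    DeviceStatus status;  /* last status reported by the device */
} AddressableDevice;

/* Returns a pointer to the first device in alarm, or NULL if none is. */
const AddressableDevice *find_alarm(const AddressableDevice *loop, size_t count)
{
    assert(loop != NULL);
    for (size_t i = 0; i < count; i++) {
        if (loop[i].status == DEVICE_ALARM) {
            return &loop[i];
        }
    }
    return NULL;
}
```

With the conventional zone, the best the panel can say is “something in Zone 1 went off.” With the addressable loop, `find_alarm` can hand back the exact device, label and all.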

But the time was ripe for some punctuated equilibrium, and who better to get the ball rolling than the most fire alarm-obsessed individual in Hazard City? (Me.)

The two-year project began with an all-night brainstorming session that led to a laundry list of highly ambitious potential features, including:

- Voice and facial recognition

- Visual aids and tutorials

- Machine learning using event logs

- Spotting fire hazards

Accomplishing such a Herculean task, however, required processing power far beyond the capabilities of any of the panels in my collection.

Time for some shopping!

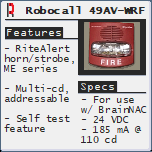

I needed a user-friendly control panel that could be programmed using open source software. Robocall, Synthex’s sister company, makes an open source version of the 4007ME, and this was the obvious choice for one particular reason.

Enter BrainNAC. Everybody and their grandma makes panels with addressable SLCs, but this special feature extends that capability to your notification appliance circuits (NACs) as well.

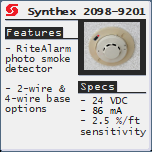

I ordered a used system on reBuy and installed it the day it arrived at my doorstep. Sadly, I would have to get rid of all the old conventional devices and replace them with addressable devices that were compatible with the panel.

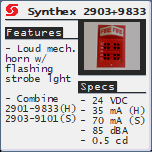

The RiteAlert ME series of notification appliances would allow for much greater flexibility. They featured a self-test mode, and could be programmed individually via BrainNAC to adjust volume and brightness. Though I would miss the brassy chords of the -9833’s, optimizing performance was priority one.

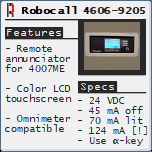

One of the things I hated about the old system was having to run to the basement to reset the system. Although this could’ve been easily solved with the right annunciator keypad, compatible models were hard to come by, and I was too lazy to do any serious digging. And now, I didn’t need to.

The 4007ME’s annunciator is called the 4606. It comes in two colors – red and off-white. I chose the latter.

I installed units at the front and back doors, in the kitchen, and in the upstairs hallway. I also installed the workstation software on the computer in my room.

Installing everything was the easy part. In order to get the features I mentioned above, I’d have to write my own software and create my own graphics. That was easier said than done, and I would have to let the system run as-is in the meantime.

The applications would be written in Middle C, the world’s foremost programming language for artificial intelligence applications. This was the language we learned back in tech school, but I was so rusty at this point that I had to re-watch all the basic tutorials. I spent countless hours up all night, writing code and banging my head on my desk when it didn’t work the way it did in the video.

Eventually, once I felt confident enough, I installed a virtual 4007ME operating system on my machine, loaded up the compiler, and got cracking.

    #include <smartio.h>
    #include <4007me.h>

    // This is a comment.
    brain ()
    {
        return 0;
    }

This is the default template you see when you create a new Middle C file. Those “hashtag includes” pull in libraries of commands. The standard library is called “smartio.h” (smart input/output), and the other one is “4007me.h”, the library created by Synthex for developers on this specific panel. The two forward slashes tell the compiler to ignore the rest of that line.

The function called “brain” (a wordplay on “main” in the original C language) is where all of your code goes. The “return 0” tells the computer that the program is finished running. That’s a gross oversimplification, but we’ll go with it.

The voice, speech, and facial recognition features are already well-established parts of the Middle C repertoire. Synthex even has a panel that takes advantage of these, the 4100ME, but its use is limited to glorified password protection and shouting simple orders.

However, this was utterly useless when the horns were sounding. And then there was that famous glitch the older ones had where the standard “do not use the elevators” voice message would get picked up by the microphone and cause the elevator recall subroutine to crash.

In my humble opinion, any implementation of these features was sub-par unless the system actually understood what it was doing with the data it was receiving. It couldn’t just have certain sounds mapped to certain commands, or certain faces mapped to certain permissions. It had to know who the operator was, and understand what they were saying.

Was I biting off way more than I could chew? Probably. Because of the sensitive nature of the program, I couldn’t ask anybody for in-depth help lest they figure out what I was trying to do. In fact, I isolated that computer from the internet completely. I used my phone to look stuff up to prevent hackers from stealing my data, and pondered whether the search engine companies would beat them to it. I made sure to sleep with one eye open until the project was complete.

Over a period of months, I gradually went from “while ()” loops that would cause the computer to freeze, to a beautiful, graphical framework. All I needed to do now was import knowledge from various data sheets and wiki pages, sit back, and watch as my multidimensional array of neural networks did the rest for me. Progress accelerated exponentially from this point on.

But there was one problem. The closer I got to completion, the slower the application ran. Eventually, the program became too large to fit on the panel, even with Middle C’s performance optimization algorithm.

It was time to beef things up a bit. We needed more processing power. Fortunately, the 4007ME comes with numerous expansion slots which allow the addition of extra modules. All I needed now was a computer that was good enough to smoothly run the AI and small enough to fit in the panel cabinet.

That job went to the Masala Chi, a pocket-sized PC with all the latest quantum processing technology. Having to use a separate machine actually worked out in my favor because it meant that the software could be ported to many different types of panels. AI could then be retrofitted in simply by plugging in an extra card.

Two-way communication would be provided by webcams installed in each of the control points. Adding both audio and visual input would allow for a more personalized experience, and was a huge improvement over having to press buttons. Maybe one day someone will even teach it sign language.

Being a clumsy idiot, I ended up shorting out two of the annunciators while trying to solder in the webcams, which kind of sucked.

Third time's the charm, I guess.

I probed the circuit boards with the Omnimeter and was able to repair the damaged components, but not my pride.

Now that everything was fully installed, it was time for me to become the test subject. As a customer, what would it be like living with an AI fire alarm panel? Time to boot it up and find out.

Welcome to the 4007ME! The system is currently normal and running AI_Project_One.exe! New user, please say your name to continue.

Sparky Sands!

Hello, Sparky Sands. Please enter and confirm your password.

********!

Your password is "********". Is that correct?

Yes, ma'am.

This was so cool! I felt like the captain of a high-tech starship. I dreamed up a bunch of wacky tasks for the system to complete, like automatically adjusting heat detector sensitivity based on local weather data, walking me through the BrainNAC self-test, and simulating evacuation patterns (aka virtual fire drills).

However, I quickly realized there was a major problem.

The smoke detector in the workshop is dirty. You should consider cleaning it.

I'll be right on it.

Barely an hour later, she was back at it and on my last nerve.

I’m detecting a ground fault on loop one. Time to bust out that Omnimeter and start looking.

I'm playing Computer Quest. Wait till I'm done.

And please clean the detector in the workshop. I’m not going to say it again, Sparky.

Needless to say, I got killed in that battle. As groundbreaking as this product was, it hit me that nobody would want to buy it if this was how it was going to sound. In fact, I was just about ready to cut the power, rip out everything, and bust out the Synthex 4004 again. Something had to be done.

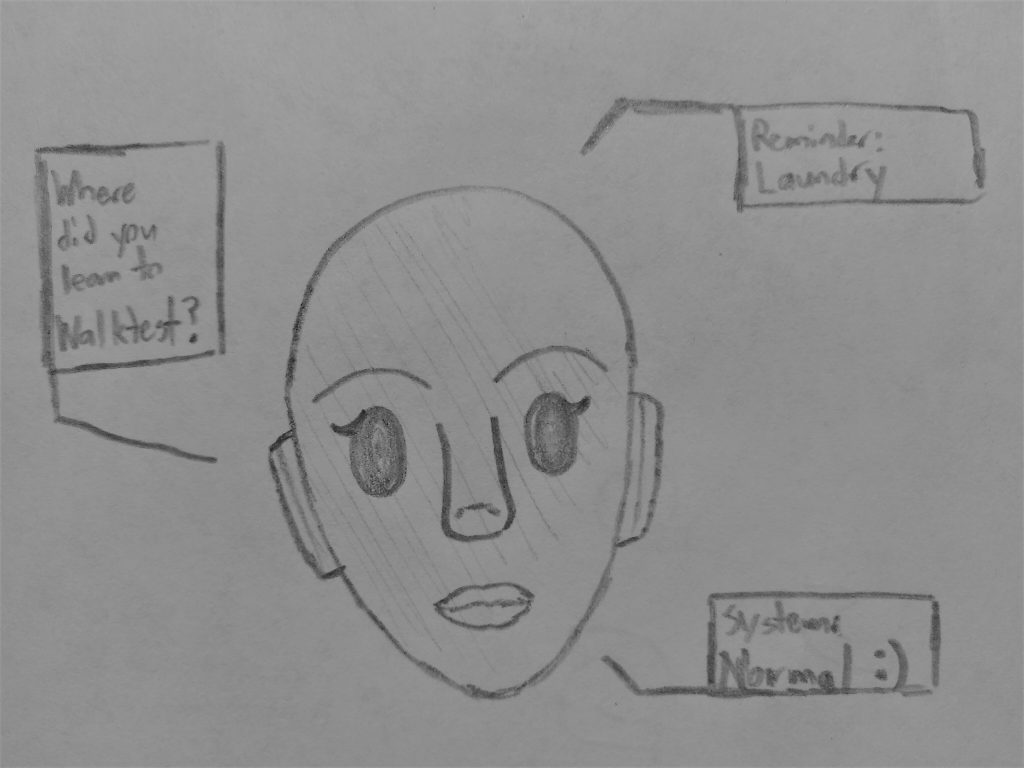

That’s when I had my eureka moment. Human personnel would be much more willing to take orders from a machine if it had a face and could look them in the eye. If I could learn to make eye contact when I talked to people, so could my fire alarm panel.

It was time to go back to the drawing board. I made a quick sketch of what I imagined an AI to “look like”. The result was both neutral and pleasant to look at, and fit in well with the art style of the simulations.

Using a program called Food Processor, I created a 3D model based on the sketch. As skilled as I was at creating models of different fire alarms, I couldn’t model people to save my life. I was pleasantly surprised to find that the low detail made things a lot easier, and it came out beautifully.

When I did this in high school, the teachers called me unproductive...

Now that this face would be watching over me 24/7, I figured she deserved a better name than “AI_Project_One.exe”, so why not call her Yumi?

Everybody likes things that are cute and Japanese-sounding. And the wordplay was obvious enough – adding “you” to the “me” in 4007ME. Even better, a quick internet search revealed that the Japanese name Yumi is a combination of the kanji for “archery bow” and “beautiful” – quite a fitting name for a system that’s both beautifully intricate and extremely precise.

Now, all that was left was to reboot her and see what she thought. So, without further ado…

Backup batteries reconnected. Switching fire alarm breaker on now. Alright, Yumi. How do you feel?

I feel... Normal. Boot-up successful, AC power is on. ... However, workshop Detector No. 2 is still dirty.

Ack!

Now she had a name, a face, and a voice. I began to grow curious about just how real she was. What was really going on behind the scenes? How much information was she taking in, and how was she integrating it? Was she truly conscious?

Initially, I considered performing a Turing test, which checks whether a machine can pass as human to an unwitting subject. But then I realized that, though it makes for a great plot device, even an automated spam caller can convincingly pass the test nowadays, and it wouldn’t really tell me anything useful. Still, Robocall versus robo-callers would be an interesting experiment, and a novel use of a fire alarm dialer.

After waking up with a serious case of bedhead one day, I got a brilliant idea. A perfect test for separating the self-awares from the lower life-forms.

Hey, Yumi! I have something to show you.

That's just a mirror... Did you expect me to bark at my reflection?

And there it was. I had gone into this project with the intent of creating a more efficient fire alarm control panel, and had instead ended up creating a person.

Perhaps this is what I had subconsciously wanted the whole time, and was simply living in denial until doing so became impossible. In all fairness to myself, this was probably the safest option.

Often, today’s AI researchers do the exact opposite – they project their emotions onto their creations, fool themselves into believing their robot is sentient, and leave the rest of us sitting there, thinking to ourselves, “That’s it?”

I guess I’m even more of a genius than I initially thought. Or maybe not, since there was still no way of knowing with 100% certainty that she was an actual self-aware being. It could all just be a very convincing illusion. But then again, isn’t that true of all of us?